Implementing the Green Iguana Detection Dashboard

1. Project Outline

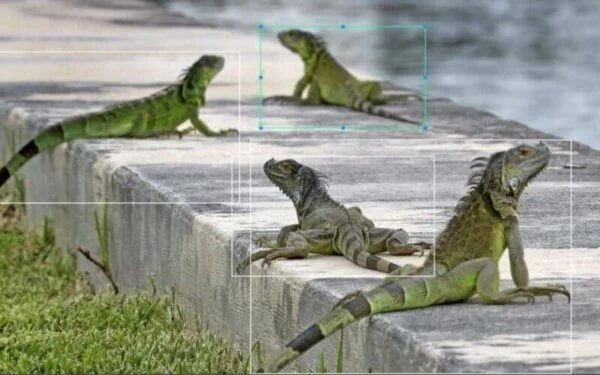

This page is a brief introduction to "Green Iguana Individual Detection and Monitoring", a public-interest open source project in the NVIDIA Jetson community. The project uses a Jetson Nano with a computer vision object detection model to detect the presence and appearance of green iguanas in real time.

We also provide the GitHub repo for this open source project, so that readers building similar projects can reuse the model for rapid development.

2. Project Motivation

What is a green iguana?

The American iguana, also known as the green iguana, is a large arboreal lizard. It can reach 1 to 2 meters in length from head to tail and can live for more than 10 years. It feeds on the leaves, buds, flowers, and fruits of plants. It is a diurnal reptile that can lay 24 to 45 eggs per clutch. It reproduces prolifically and adapts readily to new environments.

The severity of the ecological disaster caused by green iguanas

In Taiwan, abandoned green iguanas are rapidly reproducing in the wild. The Forestry Bureau of the Council of Agriculture has stated that green iguanas not only harm the ecology but also cause crop losses for farmers by feeding on plants. Because they habitually dig burrows, they also damage riverbanks and fish ponds. According to the government's current monitoring, green iguanas have spread to multiple counties and cities.

Establishing a Green Iguana Monitoring System

Thanks to the development of edge devices in recent years, we have been able to develop and deploy a real-time computer vision application that detects and monitors the whereabouts of green iguanas. With the system's real-time alerts and notifications, we can take measures to prevent property losses caused by green iguanas.

Software Architecture

3. Data Collection

Green iguana image labels

Image crawler

Using the Selenium dynamic web crawler, we collected about 5,000 green iguana pictures and labeled them one by one. We also provide downloads of images and label materials here. Please see the image labeling tutorial we have prepared.
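The crawling step described above can be sketched roughly as follows. This is a minimal illustration, not the project's actual crawler: the function names `keep_image_urls` and `collect_image_urls`, the scroll count, and the pause length are all assumptions made for the example. It assumes Selenium 4 and a page that loads more images as it is scrolled.

```python
from urllib.parse import urlparse


def keep_image_urls(candidates):
    """Drop empty, inline (data:) and duplicate URLs, preserving order."""
    seen = set()
    kept = []
    for url in candidates:
        if not url:
            continue  # <img> tags without a src attribute
        if urlparse(url).scheme not in ("http", "https"):
            continue  # skip inline thumbnails such as data: URIs
        if url in seen:
            continue
        seen.add(url)
        kept.append(url)
    return kept


def collect_image_urls(driver, page_url, scrolls=5, pause_s=1.0):
    """Open a dynamic page, scroll to trigger lazy loading, return image URLs.

    `driver` is an already-created Selenium WebDriver (hypothetical setup).
    """
    import time
    from selenium.webdriver.common.by import By  # requires the selenium package

    driver.get(page_url)
    for _ in range(scrolls):
        # Scrolling to the bottom makes many image pages load the next batch.
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        time.sleep(pause_s)
    images = driver.find_elements(By.TAG_NAME, "img")
    return keep_image_urls(img.get_attribute("src") for img in images)
```

To use it, create a driver with `webdriver.Chrome()` from the `selenium` package, pass it to `collect_image_urls` together with the search page URL, and call `driver.quit()` when finished. Downloading the collected URLs and checking image licenses are left out of this sketch.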

Image labeling

Because several people labeled the images together, we used the web-based object detection labeling tool makesense.ai, which is easy to use and does not require installing any software.

Instructions for using this tool can be found here.
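Labels exported from a web tool usually need to be converted before training. The sketch below assumes the labels were exported in YOLO text format (class index plus normalized center, width, and height) and that the training pipeline expects KITTI-style label lines with pixel coordinates. Both are assumptions for illustration, so check them against the labeling tutorial and the training spec. The function name and the class name `green_iguana` are hypothetical.

```python
def yolo_to_kitti_line(yolo_line, img_w, img_h, class_names):
    """Convert one YOLO label line to a 15-field KITTI label line.

    YOLO:  <class_id> <x_center> <y_center> <width> <height>  (normalized 0..1)
    KITTI: <name> truncated occluded alpha left top right bottom + 7 3D fields
    Only the class name and the 2D box are filled in; the rest are zeros.
    """
    class_id, xc, yc, w, h = yolo_line.split()
    xc, yc, w, h = (float(v) for v in (xc, yc, w, h))

    # Convert normalized center/size to pixel corner coordinates.
    left = (xc - w / 2) * img_w
    top = (yc - h / 2) * img_h
    right = (xc + w / 2) * img_w
    bottom = (yc + h / 2) * img_h

    # Clamp to the image so rounding in the labeling tool cannot leak outside.
    left, right = max(0.0, left), min(float(img_w), right)
    top, bottom = max(0.0, top), min(float(img_h), bottom)

    name = class_names[int(class_id)]
    return (
        f"{name} 0.00 0 0.00 "
        f"{left:.2f} {top:.2f} {right:.2f} {bottom:.2f} "
        "0.00 0.00 0.00 0.00 0.00 0.00 0.00"
    )
```

For a 640x480 image, `yolo_to_kitti_line("0 0.5 0.5 0.25 0.5", 640, 480, ["green_iguana"])` yields a box from (240, 120) to (400, 360).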

4. Training a green iguana detection model using Nvidia TLT

Nvidia Transfer Learning Toolkit

Introduction to Nvidia Transfer Learning Toolkit (TLT)

Creating AI/ML models from scratch to solve business problems is extremely costly, so “transfer learning” is often used to accelerate project development. Transfer learning is a field of machine learning that focuses on retaining the knowledge gained from solving one problem, for example in a pre-trained model, and applying it to a different but related problem.

As the name suggests, NVIDIA's Transfer Learning Toolkit (TLT) is a toolkit for transfer learning. It provides popular pre-trained models for image and natural language processing. Its most important characteristic is that it is deployment-oriented: model size reduction and optimization after training are built into TLT.

In the best case scenario, training a model using TLT only requires setting training parameters; there is no need to write code from scratch. TLT implements an end-to-end process of training, optimization, and deployment, which is very different from the general development process and greatly reduces the time and technical costs of project development.

Hardware requirements

The official recommended hardware configuration for running Nvidia TLT is:

- 32 GB system RAM

- 32 GB of GPU RAM

- 8 core CPU

- 1 NVIDIA GPU

- 100 GB of SSD space

Software Requirements

NVIDIA TLT installation tutorial

For a detailed NVIDIA TLT installation walkthrough, please see the environment setup tutorial we prepared.

TLT model training and optimization

The object detection model for this project is YOLO v4. Please refer to this YOLO v4 training example code. After a slight modification, you can use green iguana pictures and labels to train the model.

Run this code and wait for the program to complete. It will automatically export a .etlt file, which is the model file we will eventually deploy.

PS. If you want to get started quickly with TLT's computer vision example code, you can find it at this official link. Almost all of the examples can be trained, optimized, and exported in one run after only slight parameter adjustments.

5. Deploy the model on the Jetson device

Introduction to DeepStream

After the model is trained, the next step is to deploy it on a Jetson device for real-time inference, while also streaming the images and inference results. We use NVIDIA DeepStream, a framework designed for inference and data streaming.

DeepStream is an end-to-end pipeline that performs deep learning inference, image and sensor processing, and delivers insights to the cloud in streaming applications. You can build cloud-native DeepStream applications using containers and orchestrate them through the Kubernetes platform for large-scale deployment. When deployed at the edge, applications can communicate between IoT devices and the cloud using standard message brokers such as Kafka and MQTT for large-scale, wide-area deployments.

Introduction to TensorRT

NVIDIA TensorRT™ is an SDK for deep learning inference. It includes an inference optimizer and a runtime that enable deep learning applications to deliver low latency and high throughput. Before deployment, the model must be converted with TensorRT to speed up inference.

TLT is a deployment-oriented model training framework, so it naturally includes conversion tools for trained models. Use tlt-converter to build a TensorRT inference engine from the .etlt model exported by TLT. Note that the tlt-converter version must match the hardware and software of the device the model is deployed on. The following are all the configuration options:

Deepstream environment installation

We have documented the steps for installing the DeepStream environment and for building the TensorRT engine from the .etlt model. The same tutorial also covers installing the other packages this project requires, such as the MQTT client. For detailed installation steps, please refer to the environment setup tutorial we prepared.
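As a rough illustration of the engine-building step, the sketch below assembles a tlt-converter command line in Python. It is not taken from the project's tutorial: the helper name `build_tlt_converter_cmd`, the file names, the input dimensions, and the optimization profile are placeholders, and the flags should be checked against the help output of the tlt-converter build that matches your device.

```python
def build_tlt_converter_cmd(etlt_path, engine_path, key,
                            input_dims="3,384,1248",
                            output_node="BatchedNMS",
                            precision="fp16",
                            opt_profile=None):
    """Return the tlt-converter argument list for one .etlt model.

    All default values are placeholders for illustration only.
    """
    cmd = [
        "tlt-converter",
        "-k", key,            # key that was used when the model was exported
        "-d", input_dims,     # input dimensions as C,H,W
        "-o", output_node,    # output node name (assumed for a YOLO v4 export)
        "-t", precision,      # engine data type: fp32, fp16 or int8
        "-e", engine_path,    # where to write the TensorRT engine
    ]
    if opt_profile:
        # Optimization profile for models exported with dynamic shapes:
        # <input name>,<min shape>,<opt shape>,<max shape>
        cmd += ["-p", opt_profile]
    cmd.append(etlt_path)     # the input model goes last
    return cmd
```

On the Jetson device the returned list could be passed to `subprocess.run(cmd, check=True)`. Building the engine on the device itself, rather than on the training machine, keeps the engine matched to the device's TensorRT version.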

Deepstream Run

deepstream_app/deepstream_mqtt_rtsp_out.py is the run script built on the DeepStream Python bindings. While the program performs object detection inference, it streams the video via RTSP and sends the inference results to the MQTT broker, so that they can be stored in the database for later visualization.

6. Data Dashboard

Plotly Dash

Plotly Dash is a framework for interactive data visualization. It lets you write a data visualization front-end page without using front-end languages such as JavaScript. Extension packages built on Dash also greatly simplify page styling and layout, which makes it well suited to rapid development of web-based data visualizations.

In addition, the live-update function provided by the package allows the web page to be continuously and dynamically updated, which is particularly suitable for the dashboard requirements of this project.
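The live-update mechanism can be sketched with Dash's `dcc.Interval` component, which fires a callback on a timer. This is a minimal illustration rather than the project's dashboard: the component IDs, the `fetch_rows` callable, and the `(timestamp, count)` row shape are assumptions, and the example assumes Dash 2.x or later.

```python
def latest_count_text(rows):
    """Format the newest (timestamp, count) row for display."""
    if not rows:
        return "No detections yet"
    ts, count = rows[-1]
    return f"{count} iguana(s) detected at {ts}"


def build_app(fetch_rows, interval_ms=2000):
    """Create a Dash app that re-reads data every `interval_ms` milliseconds.

    `fetch_rows` is a hypothetical callable returning a list of
    (timestamp, count) tuples, oldest first, e.g. a database query.
    """
    # Imported here so latest_count_text can be used without Dash installed.
    from dash import Dash, dcc, html, Input, Output

    app = Dash(__name__)
    app.layout = html.Div([
        html.H3("Green iguana detections"),
        html.Div(id="latest-count"),
        # The Interval component increments n_intervals on a timer,
        # which triggers the callback below.
        dcc.Interval(id="refresh", interval=interval_ms, n_intervals=0),
    ])

    @app.callback(Output("latest-count", "children"),
                  Input("refresh", "n_intervals"))
    def refresh(_n_intervals):
        return latest_count_text(fetch_rows())

    return app
```

Calling `build_app(my_query).run()` (or `run_server()` on older Dash releases) starts the page. The refresh interval trades freshness against database load, so a few seconds is a reasonable starting point for a monitoring dashboard.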

Dash Bootstrap Components

Bootstrap is an open source front-end framework for website and web application development. It combines HTML, CSS, and JavaScript, and provides typography, forms, buttons, navigation, and various other components and JavaScript extensions, with the aim of making dynamic web pages and web applications easier to develop.

With Dash Bootstrap Components, you can reuse the layout system defined by Bootstrap directly and quickly configure the layout of the web page.

MQTT broker

Eclipse Mosquitto

Any MQTT broker can be used. In this project, we use Eclipse Mosquitto. After installation, simply start the broker and it will begin receiving data.

Database Construction

MySQL

This project uses MySQL for the database, but users can choose whichever database they prefer. The database stores the data stream that the Jetson Nano sends to the MQTT broker during inference. Plotly Dash then reads the database at regular intervals and presents the latest data on the dashboard.

MQTT topic subscription and data writing to the database

While DeepStream is running on the Jetson Nano, it continuously publishes inference results to a specific topic on the MQTT broker over the MQTT protocol. By setting up a subscriber for that topic, you can be notified whenever new data arrives and write it to the database. The script is located at mqtt_topic_subscribe/mqtt_msg_to_db.py.
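The subscribe-and-store pattern can be sketched as follows. This is not the project's mqtt_msg_to_db.py: the topic name, the JSON fields (`ts`, `camera_id`, `num_iguanas`), and the table layout are assumptions made for the example. To stay self-contained it uses Python's built-in sqlite3 as a stand-in for MySQL, and it assumes the paho-mqtt 2.x client API.

```python
import json
import sqlite3


def init_db(conn):
    """Create the (hypothetical) detections table if it does not exist."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS detections ("
        "ts TEXT NOT NULL, camera_id TEXT NOT NULL, num_iguanas INTEGER NOT NULL)"
    )


def store_message(conn, payload):
    """Parse one JSON payload (bytes or str) and insert it as a row."""
    record = json.loads(payload)
    conn.execute(
        "INSERT INTO detections (ts, camera_id, num_iguanas) VALUES (?, ?, ?)",
        (record["ts"], record["camera_id"], int(record["num_iguanas"])),
    )
    conn.commit()


def run_subscriber(conn, host="localhost", port=1883, topic="iguana/detections"):
    """Subscribe to `topic` and write every message to the database."""
    import paho.mqtt.client as mqtt  # requires the paho-mqtt package (2.x API)

    def on_connect(client, userdata, flags, reason_code, properties):
        # Subscribing here means the subscription is renewed after reconnects.
        client.subscribe(topic)

    def on_message(client, userdata, msg):
        store_message(conn, msg.payload)

    client = mqtt.Client(mqtt.CallbackAPIVersion.VERSION2)
    client.on_connect = on_connect
    client.on_message = on_message
    client.connect(host, port)
    client.loop_forever()  # blocks; callbacks run in this thread
```

With a MySQL connector the structure stays the same, but the connection setup differs and the placeholder style is typically `%s` instead of `?`.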

Running the web dashboard

The dashboard requires the following to be in place:

- DeepStream is deployed and running on the Jetson device

- The MQTT broker is running on the data server

- The database is running on the data server

Once these conditions are met, the web dashboard can start running. Note that the DeepStream pipeline is configurable: to replace the model with another object detection model in the future, users only need to modify the DeepStream configuration file and the DeepStream Python script.
